Sensor Fusion in Human Machine Interaction Systems and in Medicine

Human-computer interaction (HCI) increasingly relies on user input detected in speech, facial expressions, and gestures from audio and video, as well as in physiological signals.

These kinds of user input can directly control the interface of a computer application. Another, more abstract problem considered here is to estimate the user's affective state from the user input.

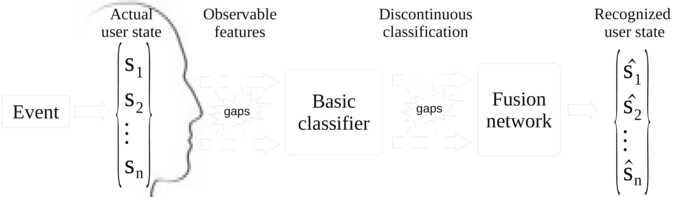

Real human-machine interaction (HMI) systems that rely on several modalities face the problem that not every information channel delivers data at regular time steps. Nevertheless, an estimate of the current user state is required at any time so that the system can respond immediately using whichever modalities are available. Novel approaches to the decision fusion of fragmentary classifications have been developed for audio, video, and physiological signals.

The resulting fusion framework, based on Associative Memories and Markov Fusion Networks, performs very well in recognizing the user's affective state [84].
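The idea of fusing fragmentary decisions over time can be illustrated with a minimal sketch. It is not the published framework, only a simplified quadratic formulation in the same spirit: each available classifier output pulls the fused estimate towards itself, and a smoothness term carries the estimate across time steps where no modality delivers data. The function name `fuse_fragmentary`, the NaN convention for missing outputs, and the parameters `weights` and `smooth` are assumptions made for this example.

```python
import numpy as np

def fuse_fragmentary(decisions, weights, smooth=1.0):
    """Fuse fragmentary class distributions from several modalities over time.

    decisions: array of shape (M, T, C); decisions[m, t] is the class
               distribution of modality m at time t, or NaN if unavailable.
    weights:   array of shape (M,), assumed reliability of each modality.
    smooth:    weight of the temporal smoothness term (must be > 0).
    Returns an array of shape (T, C) with one fused estimate per time step.
    Assumes that at least one decision is available somewhere in the sequence.
    """
    x = np.asarray(decisions, dtype=float)
    M, T, C = x.shape
    available = ~np.isnan(x).any(axis=2)                           # (M, T)
    w = np.asarray(weights, dtype=float)[:, None] * available      # (M, T)

    # Data term: sum over m, t of w[m, t] * ||y_t - x[m, t]||^2
    data_weight = w.sum(axis=0)                                    # (T,)
    rhs = (w[:, :, None] * np.nan_to_num(x)).sum(axis=0)           # (T, C)

    # Smoothness term: smooth * sum over t of ||y_t - y_{t+1}||^2,
    # expressed through the Laplacian of a chain of T time steps.
    diff = np.diff(np.eye(T), axis=0)                              # (T-1, T)
    laplacian = diff.T @ diff                                      # (T, T)

    # Setting the gradient of the quadratic energy to zero gives a linear system.
    A = np.diag(data_weight) + smooth * laplacian
    return np.linalg.solve(A, rhs)

# Hypothetical example: audio and video classifiers, 5 time steps, 2 classes.
nan = np.nan
audio = [[0.9, 0.1], [nan, nan], [nan, nan], [0.3, 0.7], [nan, nan]]
video = [[nan, nan], [0.8, 0.2], [nan, nan], [nan, nan], [0.2, 0.8]]
fused = fuse_fragmentary([audio, video], weights=[1.0, 0.5], smooth=2.0)
```

If every available input distribution sums to one, each fused row also sums to one, so the result can be read as a class distribution at every time step, including those without any classifier output.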

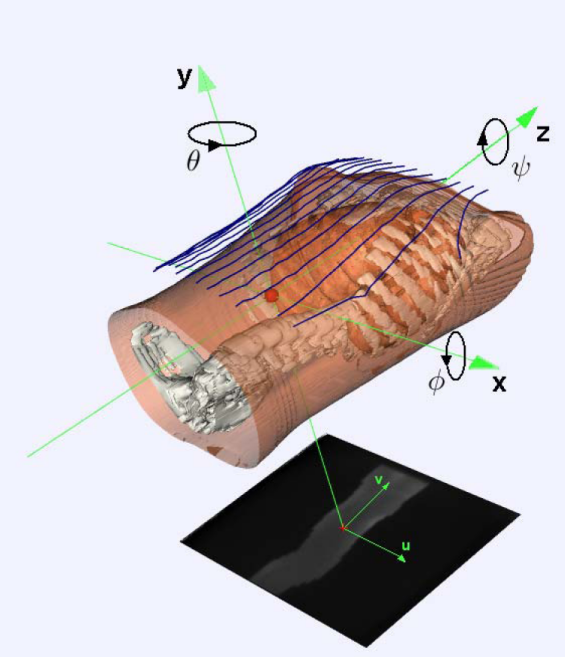

In medicine, different kinds of imaging devices are usually used for diagnosis and for guidance in therapy. In radiation therapy for cancer treatment, for example, the correct and reproducible positioning of patients at the treatment machine (e.g. a linear accelerator), as well as the monitoring and control of the correct position during treatment, are important requirements. The possibilities for gathering information on the position and motion of inner organs and bones during treatment are limited. Electronic Portal Images (EPIs) are obtained by projecting the interior of the patient's body onto a fluorescent screen using the high-energy treatment beam. Clinicians commonly use them to verify the shape and location of the treatment beam with respect to the patient's anatomy.

Due to the imaging physics, unprocessed electronic portal images have a much poorer dynamic range and resolution than the x-ray images produced for diagnostics and irradiation planning (simulator images).

From the EPIs, motion can in principle be detected only in directions parallel to the projection plane. To overcome this problem, optical 3-D surface sensors have been developed and installed at the treatment machine, enabling fast acquisition of the surface of the patient's body. Correct positioning of the patient is verified by comparing the 3-D surface data obtained during irradiation with the body surface extracted from CT data, or with surface data acquired in advance by an identical 3-D surface sensor at the simulator. Optical 3-D sensors can detect motion in the direction perpendicular to the projection plane of the portal image, which is otherwise impossible.
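The comparison of a measured surface with a reference surface can be sketched as a rigid alignment problem. The following minimal example is an illustration only, not the method used in the project: it assumes that corresponding point pairs between the two surfaces are already known and applies the standard Kabsch (SVD-based) least-squares solution. Real surface data usually lack such correspondences and would need an iterative scheme on top of this step. The function name `rigid_align` and the test data are hypothetical.

```python
import numpy as np

def rigid_align(measured, reference):
    """Least-squares rigid transform between corresponding 3-D points.

    measured, reference: arrays of shape (N, 3) with matching rows.
    Returns (R, t) such that reference is approximately measured @ R.T + t.
    """
    P = np.asarray(measured, dtype=float)
    Q = np.asarray(reference, dtype=float)
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)

    # Cross-covariance of the centred point sets
    H = (P - p_mean).T @ (Q - q_mean)
    U, _, Vt = np.linalg.svd(H)

    # Guard against a reflection: force det(R) = +1
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = q_mean - R @ p_mean
    return R, t

# Hypothetical check: rotate and shift a random point cloud, then recover the motion.
rng = np.random.default_rng(0)
surface = rng.normal(size=(50, 3))
angle = np.deg2rad(10.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0,            0.0,           1.0]])
t_true = np.array([1.0, -2.0, 0.5])
reference = surface @ R_true.T + t_true
R_est, t_est = rigid_align(surface, reference)
```

The recovered rotation and translation describe how far the patient surface deviates from the reference pose, which is the quantity a position check would compare against a tolerance.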

This motivates the fusion of surface data obtained from the optical 3-D sensor with electronic portal images and CT data. The aim is to use all available information for motion analysis by combining, for each direction, the position data from whichever sensor is most accurate and informative [67].
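One simple way to combine position data from two sensors with different strengths is inverse-variance weighting per axis. The sketch below is an assumption-laden illustration rather than the project's fusion method: it treats axes 0 and 1 as lying in the portal image plane and axis 2 as the beam direction, marks an axis that a sensor cannot observe with an infinite standard deviation, and uses made-up numbers. The function name `fuse_displacements` is hypothetical.

```python
import numpy as np

def fuse_displacements(estimates, sigmas):
    """Inverse-variance fusion of per-axis displacement estimates.

    estimates: array of shape (S, 3), one displacement vector per sensor;
               entries for unobserved axes may be NaN.
    sigmas:    array of shape (S, 3), standard deviation per sensor and axis;
               np.inf marks an axis the sensor cannot observe.
    Assumes every axis is observed by at least one sensor.
    Returns (fused displacement, fused standard deviation), each of shape (3,).
    """
    est = np.asarray(estimates, dtype=float)
    sig = np.asarray(sigmas, dtype=float)
    w = 1.0 / sig**2                                  # infinite sigma gives weight 0
    est = np.where(np.isfinite(sig), est, 0.0)        # ignore unobserved entries
    w_sum = w.sum(axis=0)
    fused = (w * est).sum(axis=0) / w_sum
    return fused, 1.0 / np.sqrt(w_sum)

# Hypothetical values in millimetres.
epi     = [2.0, -1.0, np.nan]   # portal image: in-plane motion only
surface = [2.6, -0.4, 3.0]      # optical 3-D sensor: all three axes
fused, fused_sigma = fuse_displacements(
    [epi, surface],
    [[0.5, 0.5, np.inf], [1.0, 1.0, 1.0]],
)
```

With these numbers the in-plane components are dominated by the more precise portal image estimate, giving roughly (2.12, -0.88), while the component along the beam direction comes entirely from the surface sensor (3.0).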

[67] N. Riefenstahl, G. Krell, M. Walke, B. Michaelis, and G. Gademann. Optical surface sensing and multimodal image fusion for position verification in radiotherapy. In International Conference on Medical Information Visualisation - MediVis2006 London, pages 21-26, 2006.

[84] Gerald Krell, Michael Glodek, Axel Panning, Ingo Siegert, Bernd Michaelis, Andreas Wendemuth, and Friedhelm Schwenker. Fusion of fragmentary classifier decisions for affective state recognition. In Schwenker, Scherer, and Morency, editors, Multimodal Pattern Recognition of Social Signals in Human Computer Interaction (MPRSS 2012), volume 7742 of Lecture Notes in Artificial Intelligence (LNAI), pages 116-130. Springer, Tsukuba Science City, Japan, November 11, 2012.

Contact: Gerald Krell